Statement: This series of posts records my personal notes and experience from following the BiliBili video tutorial “Pytorch 入门学习” ("Getting Started with PyTorch"). Most of the code and pictures come from the courseware PyTorch-Course. All posted content is for personal study only; please do not use it for other purposes. If there is any infringement, please e-mail yangsuoly@qq.com and it will be deleted.

1. Introduction to deep learning models

1.1 Definition

Q: What is machine learning?

A: Study of algorithms that:

- Improve their performance P

- At some task T

- With experience E

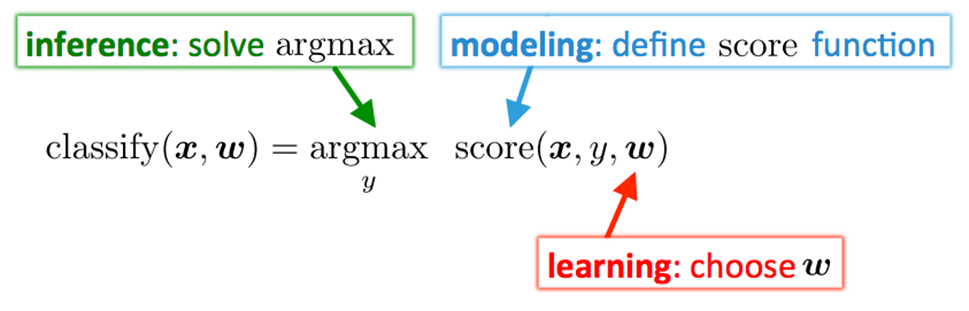

Conclusion: modeling, inference, learning

- Modeling: define a score function

- Inference: solve the argmax over that score

- Learning: choose the parameters w
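The three steps can be illustrated with a minimal PyTorch sketch. The toy data, the linear score function, and the hyperparameters below are assumptions chosen for illustration; they are not taken from the course.

```python
import torch

torch.manual_seed(0)

# Hypothetical toy task: 2-D points, label 1 if x0 + x1 > 0, else 0.
X = torch.randn(200, 2)
y = (X.sum(dim=1) > 0).long()

# Modeling: a linear score function, one score per class.
model = torch.nn.Linear(2, 2)

# Learning: choose w by minimizing the cross-entropy loss with gradient descent.
optimizer = torch.optim.SGD(model.parameters(), lr=0.5)
for _ in range(100):
    optimizer.zero_grad()
    loss = torch.nn.functional.cross_entropy(model(X), y)
    loss.backward()
    optimizer.step()

# Inference: predict the class with the highest score (argmax).
pred = model(X).argmax(dim=1)
accuracy = (pred == y).float().mean().item()
print(f"training accuracy: {accuracy:.2f}")
```

Here the performance P is the accuracy, the task T is the classification of points, and the experience E is the labelled data.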

Q: What is deep learning?

A:

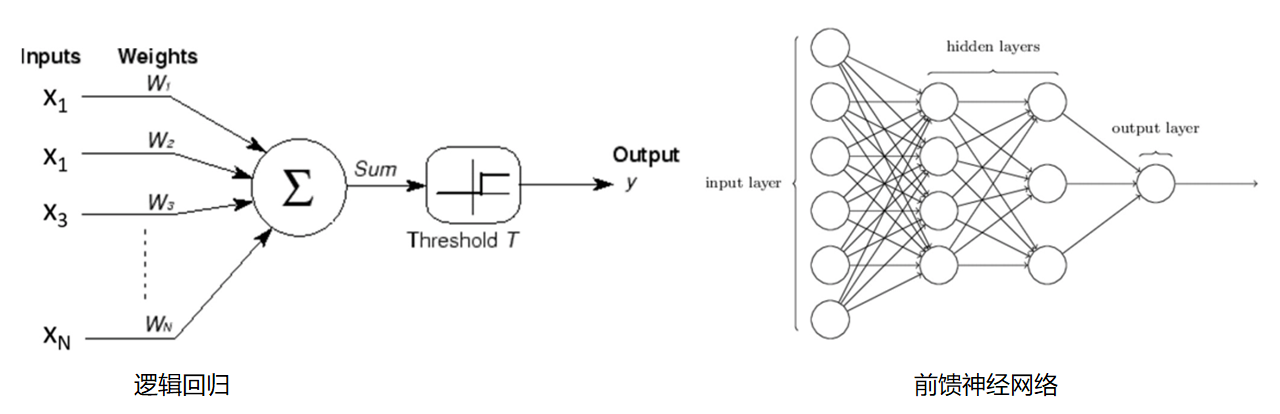

Q: What is a neural network?

A:

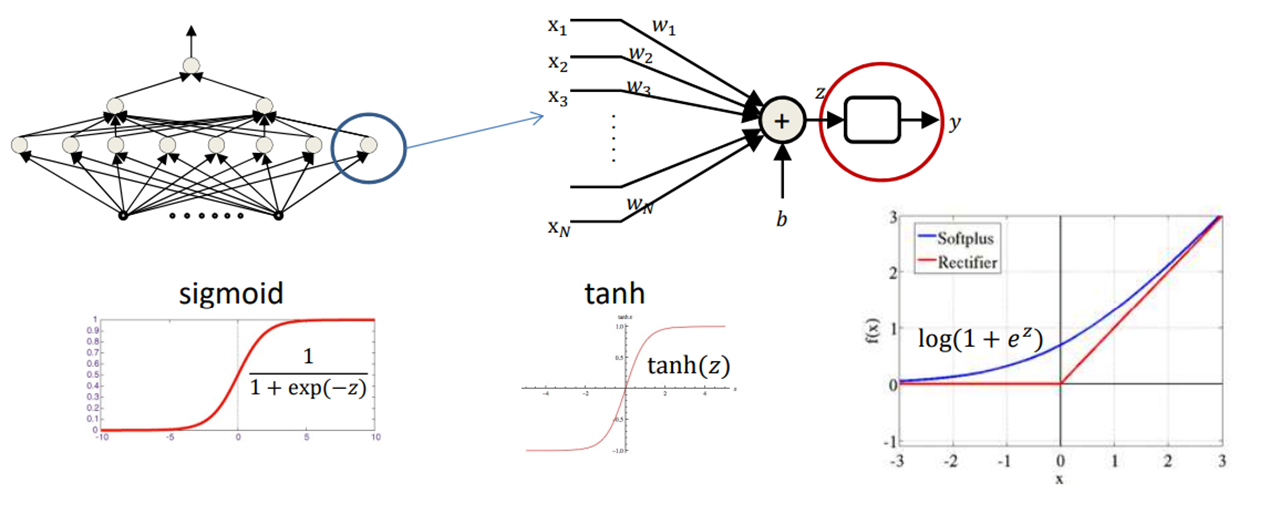

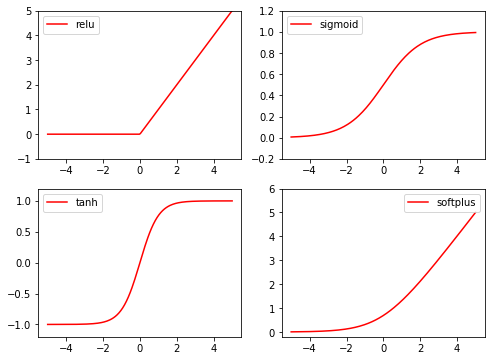

1.2 Activation function

1.2.1 Commonly used activation functions:

- :

- :

- :

- :

1.2.2 Code implementation

    import torch

Result:

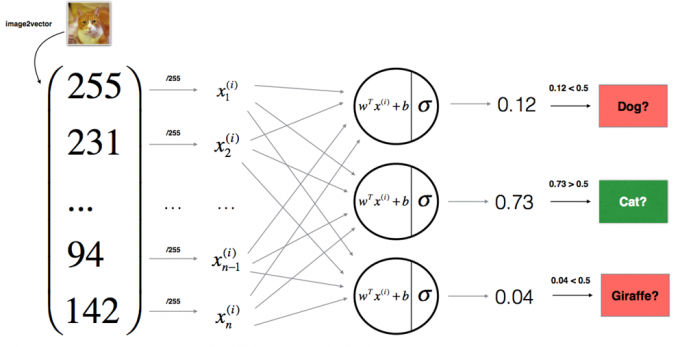

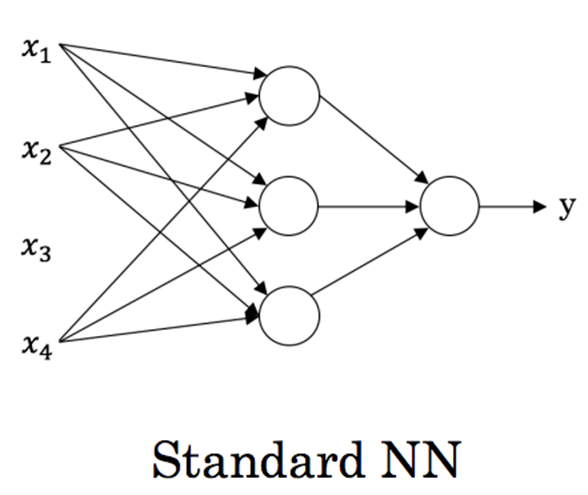

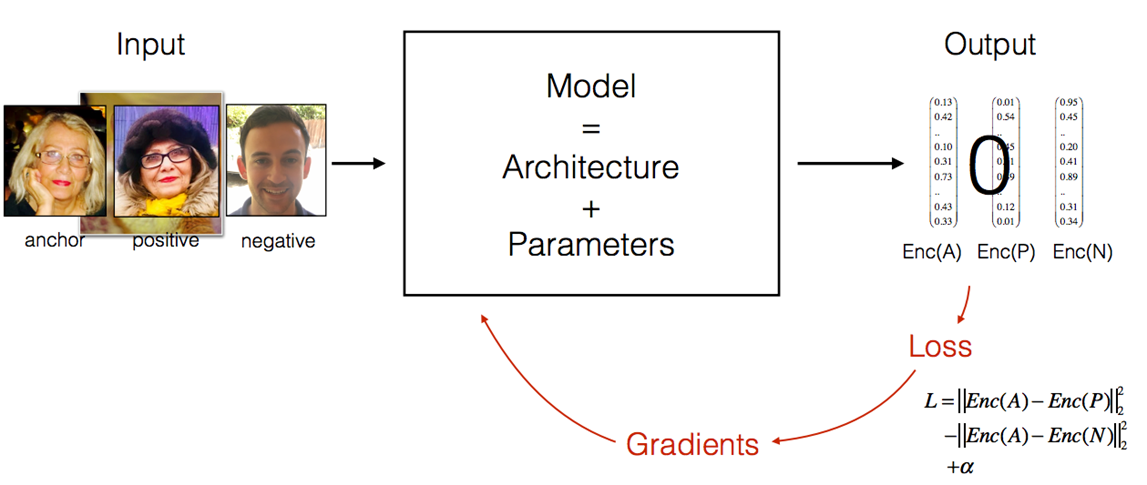
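The code listing above is cut off after the import. As a rough sketch of how activation functions can be evaluated in PyTorch, the block below applies four common ones to the same input. The choice of sigmoid, tanh, ReLU and softplus is an assumption, since the names in the list above were lost; they may not be the ones the course used.

```python
import torch
import torch.nn.functional as F

# Evenly spaced inputs from -5 to 5.
x = torch.linspace(-5.0, 5.0, steps=11)

# Assumed set of activations; each call returns a tensor shaped like x.
activations = {
    "sigmoid": torch.sigmoid(x),   # squashes values into (0, 1)
    "tanh": torch.tanh(x),         # squashes values into (-1, 1)
    "relu": torch.relu(x),         # max(0, x)
    "softplus": F.softplus(x),     # smooth approximation of ReLU
}

for name, y in activations.items():
    print(name, y)
```

To plot the curves, `x.numpy()` and `y.numpy()` can be passed to a plotting library such as matplotlib.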

1.3 Examples of NN

-

Standard feedforward NN

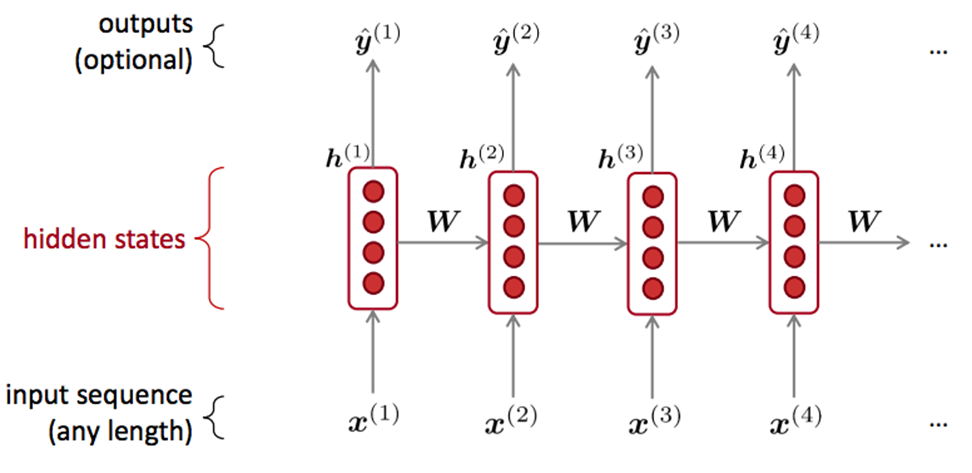

-

Convolutional NN

-

Recurrent NN

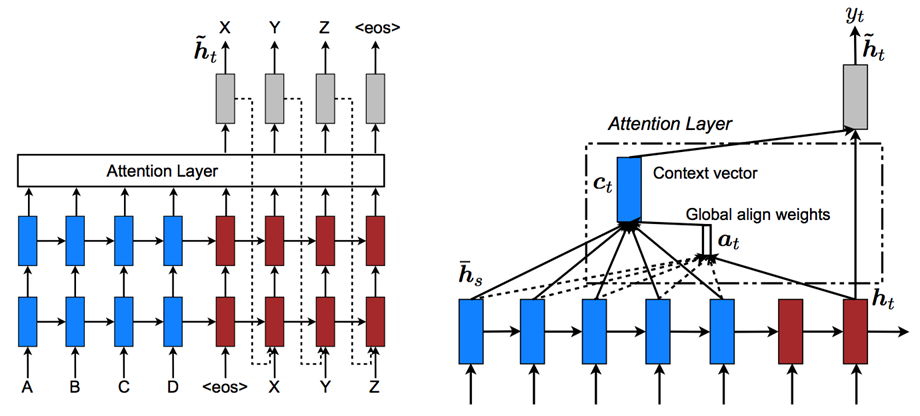

-

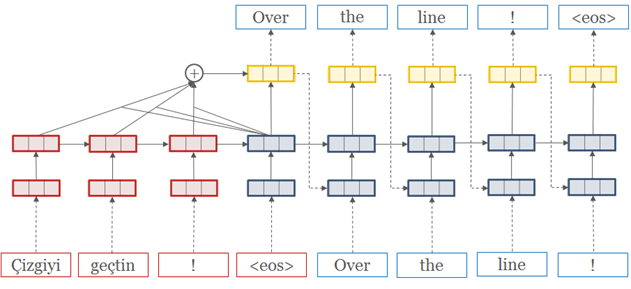

Seq2Seq with Attention

Reference: Effective Approaches to Attention-based Neural Machine Translation
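The four architectures can be sketched with standard `torch.nn` modules. All layer sizes and input shapes below are arbitrary assumptions for illustration, and the attention step uses only the dot-product score, which is one of the scoring functions described in the referenced paper; it is not a full Seq2Seq model.

```python
import torch
from torch import nn

torch.manual_seed(0)

# Standard feedforward NN: linear layers with a nonlinearity in between.
mlp = nn.Sequential(nn.Linear(16, 32), nn.ReLU(), nn.Linear(32, 4))
mlp_out = mlp(torch.randn(8, 16))          # shape: (8, 4)

# Convolutional NN: convolution and pooling over image-shaped input.
cnn = nn.Sequential(
    nn.Conv2d(3, 6, kernel_size=3, padding=1),
    nn.ReLU(),
    nn.MaxPool2d(2),                       # 28x28 -> 14x14
    nn.Flatten(),
    nn.Linear(6 * 14 * 14, 4),
)
cnn_out = cnn(torch.randn(8, 3, 28, 28))   # shape: (8, 4)

# Recurrent NN: processes a sequence step by step, carrying a hidden state.
rnn = nn.RNN(input_size=16, hidden_size=32, batch_first=True)
outputs, h_n = rnn(torch.randn(8, 5, 16))  # outputs: (8, 5, 32), h_n: (1, 8, 32)

# Attention (dot-product score): weight the encoder outputs by their
# similarity to a decoder state, then sum them into a context vector.
decoder_state = torch.randn(8, 32)         # hypothetical decoder hidden state
scores = torch.bmm(outputs, decoder_state.unsqueeze(2)).squeeze(2)  # (8, 5)
weights = torch.softmax(scores, dim=1)     # attention weights over 5 steps
context = torch.bmm(weights.unsqueeze(1), outputs).squeeze(1)       # (8, 32)

print(mlp_out.shape, cnn_out.shape, outputs.shape, context.shape)
```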

2. Introduction to PyTorch

2.1 Framework for deep learning

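Difference between PyTorch and TensorFlow:

- PyTorch: Dynamic Computation Graph

- TensorFlow: Static Computation Graph (this describes TensorFlow 1.x; TensorFlow 2.x runs eagerly by default)

PyTorch code is easy to read and very close to native Python, so it does not feel like learning an entirely new language. It is backed by Facebook and has an active community.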
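A small sketch of what "dynamic" means in practice: the graph is built while the Python code runs, so ordinary control flow such as a `while` loop decides which operations are recorded. The function `f` and its threshold are made up for illustration.

```python
import torch

def f(x):
    # The number of loop iterations depends on the data, so the
    # recorded graph can differ from one call to the next.
    y = x
    while y.norm() < 10:
        y = y * 2
    return y.sum()

x = torch.tensor([1.0, 2.0], requires_grad=True)
out = f(x)
out.backward()   # autograd walks back through the graph just recorded

print(x.grad)    # tensor([8., 8.]): the loop doubled y three times
```

With this input the loop runs three times, so `y = 8 * x` and the gradient of `y.sum()` with respect to each element of `x` is 8.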

Q: What does PyTorch do?

A:

2.2 Some interesting projects with PyTorch

-

ResNet

Image classification: ResNet -

Object Detection

Project address: Here -

Image Style Transfer

Project address: Here -

CycleGAN

Project address: Here -

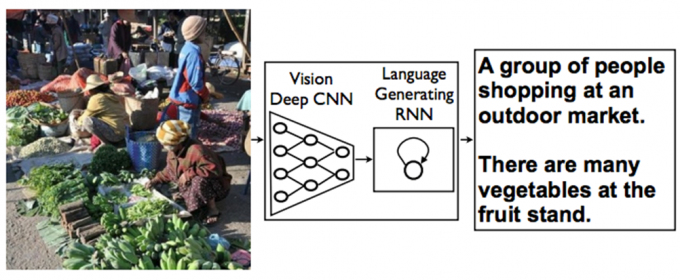

Image Captioning

Project address: Here -

Sentiment Analysis

Project address: Here -

Question Answering

Project address: Here -

Translation: OpenNMT-py

Project address: Here -

ChatBot

Project address: Here -

Deep Reinforcement Learning

2.3 How to learn PyTorch

- Learn the basics of deep learning;

- Work through the official PyTorch tutorial;

- Study tutorials on GitHub and various blogs;

- Consult the documentation and forums;

- Re-create open-source PyTorch projects;

- Read papers about deep learning models and implement them;

- Create your own model.

3. Note content

- PyTorch framework and an introduction to autograd, simple feedforward neural networks;

- Word vector;

- Image classification, CNN, Transfer learning;

- Language Model, Sentiment Classification, RNN, LSTM, GRU;

- Translation Model, Seq2Seq, Attention;

- Reading Comprehension, ELMo, BERT, GPT-2;

- ChatBot;

- GAN, Face generation, Style Transfer.